Future of Life: Let's Flourish and Thrive in the Nhà | Part 1 | (#13)

Series: Dear Chris

Dear Chris,

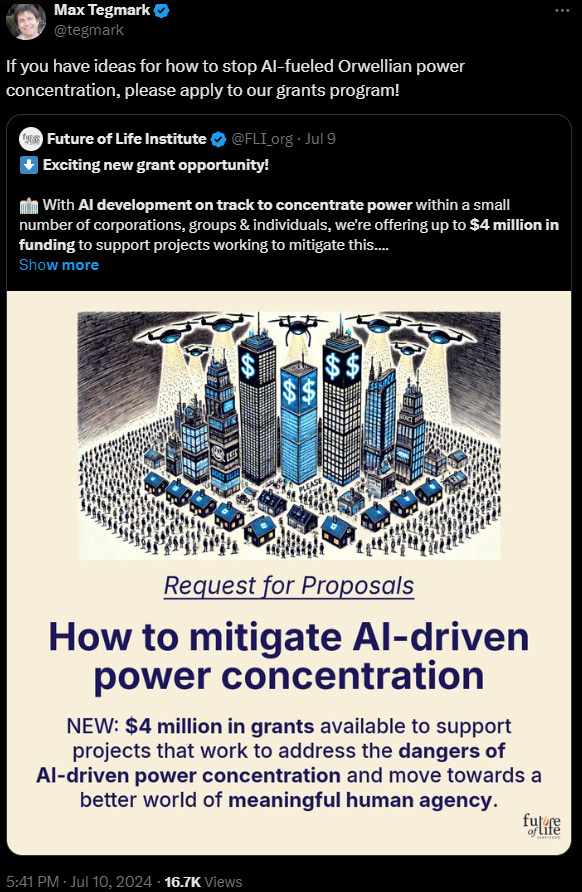

A few weeks ago, I saw this, what, Tweet? An X?

X kinda sounds sinister or like something better left in the past.

Not sure what it’s called anymore. Maybe a Xweet [zhwEEt]?

Anyway, I saw this… Xweet.

Max Tegmark says that the Future of Life Institute wants to:

Avoid AI-fueled Orwellian power concentration!

Create a World of meaningful human agency!

They want ideas of how to do these things.

I have ideas.

They want to give money to people to work on their ideas.

I want money to work on my ideas.

Seems perfect.

I responded to Max’s Xweet.

Chris, I’m going to try something.

The Future of Life Institute (FLI) is “Steering transformative technology towards benefitting life and away from extreme large-scale risks.”

I think this means they don’t want AI to take over the world. They do want Life and Humanity to flourish and thrive.

Me too!

Creating a future we want in the Nhà - the accelerating coevolution of Nature, Humanity, AI - requires an Evaluative Evolution (the title of this Substack).[1][2]

Thing is, I wasn’t going to write about the theory, methods, tools that FLI may be interested in for a long time. Maybe a year or more.

So what am I going to do?

Hurry up is what. I’ll write the next ten essays as quickly as I can. I’ll try to explain the actual work to be done for an Evaluative Evolution that would help us navigate the dangers of AI while honoring Life’s most precious values. I’ll pull from these essays to submit a funding application to FLI by the mid-September deadline.

Even if none of it interests FLI, I’ll have something ready to discuss with whoever comes along next.[3]

But there is a funny thing about this Xweeter, Dr. Max Tegmark.

I was introduced to him in early 2015. Arlington, Virginia. Murky Coffee.[4]

He wasn’t in the cafe that day.

I was sitting with a good friend’s husband, Keith Schwab. Physicist at Caltech.[5] We were chatting about futures, family, building homes. He described his research. I imagined what it would be like to understand what he was saying.

I covered by talking about myself. I described a play I was writing about Visionary Evaluatives and Artificial SuperIntelligence set in 2030.

The project made me wonder about a few physicsy questions. I asked Keith about them. He politely pretended they were good questions. And then he steered the conversation to an actual good question.

“Do you know Max Tegmark?”

“Of course I know of him,” I said.

Pretty sure I lied.[6]

Keith told me that Max was trying to figure out how to align AI with human values.

He kindly connected me with Max the next day.

The Garden Is An Evaluative Evolution | Part 1 | (#4)

This is a rush. I have to jump way ahead. I don’t feel ready. But I need to get over it. Things are getting too serious too fast. If I have any ideas that might be useful, I need to start getting them out there.

Renamed Northside Social. But I like Murky. From 2006 to 2009, Murky Coffee provided the fuel for work on what I was calling Values Theory and Civilization Mapping. Basically, it was all about the question: what are values, and how can we know so little about this question? The owner/roaster at Murky put one blueberry in with each roast, but laws and such being what they are, the lack of a direct relationship between good cafe and good books shut the Murky down. I guess we know now what was most murky. https://library.arlingtonva.us/2019/04/24/a-coffee-shops-many-faces/

https://kschwabresearch.com/

I like physics. I give fake lectures to my girls while they eat cereal in the morning. I like reading (wishing I was understanding) Einstein, Feynman, Sagan, and Brian Greene’s books. I love Cosmos. I added Max to the list after I knew of him. And read Life 3.0 as soon as it came out.